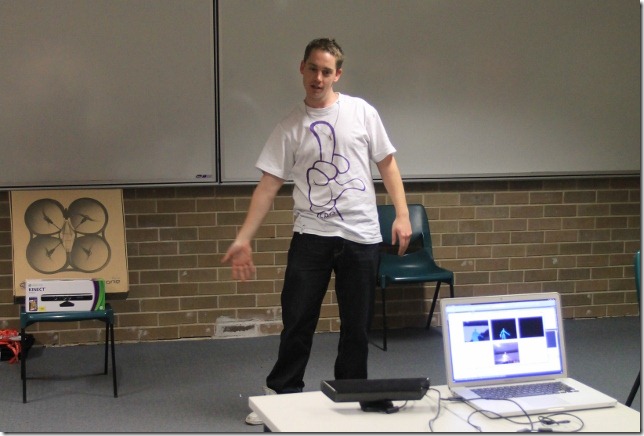

Yesterday I headed down to Sydney to be a mentor/trainer for the Developer Developer Developer Kinect SDK Funday. Lewis had organised a bunch of Kinects and a cool prize for the winning application. Plenty of people turned up, and after a really short introduction session to the Kinect SDK they self-organised into groups and set off building for the day.

I was impressed by how few questions came up. People seemed to be busily working away, so the SDK must have been pretty self-explanatory. A few questions did come up multiple times, so I thought I’d share them here.

“I bought a Kinect bundled with an Xbox – how do I use it on my laptop?” – I was lucky enough to have bought a standalone Kinect, but if you bought the Xbox bundle you’ll need to get a separate power supply cord. I found one this morning on the Microsoft site:

“Where do I get finger information from?” – The skeletal tracking doesn’t currently track fingers. If you want finger data, you need to either use another SDK or roll your own solution from one of the other data streams. Maybe depth info combined with hand tracking?
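As a rough illustration of the “depth info + hand tracking” idea, the first step is usually to isolate the pixels that belong to the hand: take the hand joint from skeletal tracking, look at a small window of the depth frame around it, and keep only the pixels at roughly the same depth. The sketch below is plain Python and does not use the Kinect SDK at all; it assumes the depth frame is a 2D list of millimetre values (0 meaning no reading) and that you have already mapped the hand joint to pixel coordinates. The function name and the `window`/`band_mm` parameters are hypothetical, and actually finding fingers in the resulting blob (for example via contour analysis) is left out.

```python
from typing import List, Tuple

# Assumed representation: rows of depth values in millimetres, 0 = no reading.
DepthFrame = List[List[int]]


def segment_hand(depth: DepthFrame, hand_px: Tuple[int, int], hand_depth_mm: int,
                 window: int = 40, band_mm: int = 80) -> List[Tuple[int, int]]:
    """Return (x, y) pixels near the hand joint whose depth is close to the hand's depth.

    hand_px is the hand joint already projected into depth-image coordinates
    (hypothetical input; how you obtain it depends on your SDK).
    """
    hx, hy = hand_px
    height = len(depth)
    width = len(depth[0]) if height else 0
    pixels = []
    # Only scan a square window around the hand, clipped to the frame edges.
    for y in range(max(0, hy - window), min(height, hy + window + 1)):
        for x in range(max(0, hx - window), min(width, hx + window + 1)):
            d = depth[y][x]
            # Skip missing readings and anything too far in front of or behind the hand.
            if d and abs(d - hand_depth_mm) <= band_mm:
                pixels.append((x, y))
    return pixels


if __name__ == "__main__":
    # Tiny 5x5 frame: background at 2 m, a "hand" at about 1 m.
    frame = [[2000] * 5 for _ in range(5)]
    frame[2][2] = 1000
    frame[2][3] = 1050
    frame[1][2] = 0  # a missing depth reading
    print(segment_hand(frame, (2, 2), 1000, window=1, band_mm=80))  # [(2, 2), (3, 2)]
```

Restricting the search to a window around the tracked joint keeps the work small and avoids picking up other objects that happen to be at the same distance.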

“The audio speech recognition matches random things – how do I stop that?” – SpeechRecognizedEventArgs has a Result.Confidence property that you can use to set your own threshold for acting on a “matched” item. I’d suggest that if it’s only 30% confident, you probably want to ignore the result.
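The thresholding idea is simple enough to show in a few lines. This is a language-neutral sketch in Python rather than the SDK’s own event handler: `RecognitionResult` is a hypothetical stand-in for the result object, assuming the confidence is reported on a 0.0 to 1.0 scale, and the 0.7 cut-off is an arbitrary starting point you would tune for your own grammar and room.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RecognitionResult:
    """Hypothetical stand-in for the recognised result (text plus confidence)."""
    text: str
    confidence: float  # assumed scale: 0.0 (no confidence) to 1.0 (certain)


# Assumed threshold; anything below this is treated as a false match.
MIN_CONFIDENCE = 0.7


def accept(result: RecognitionResult, threshold: float = MIN_CONFIDENCE) -> Optional[str]:
    """Return the recognised text if it meets the threshold, otherwise None."""
    if result.confidence >= threshold:
        return result.text
    return None


if __name__ == "__main__":
    print(accept(RecognitionResult("next track", 0.3)))   # None: ignored
    print(accept(RecognitionResult("next track", 0.85)))  # next track
```

In a real app you would run the same check at the top of your speech-recognised handler and simply return early when the match is not confident enough.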

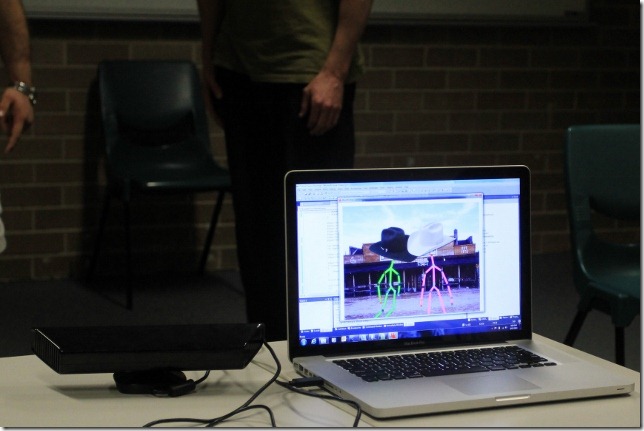

We finished up the day with the groups showing off what they’d built in a few hours. Here are some pictures of just a few of the solutions. The prize winners built a gunslinger app, where each player’s skeleton wore a cowboy hat and you competed to see who was the fastest draw.

There was a human Tetris application where you had to mould your body to fit through the shape approaching you.

A jukebox controller for media centre that used clapping to turn it on, arm waving to skip, and so on.

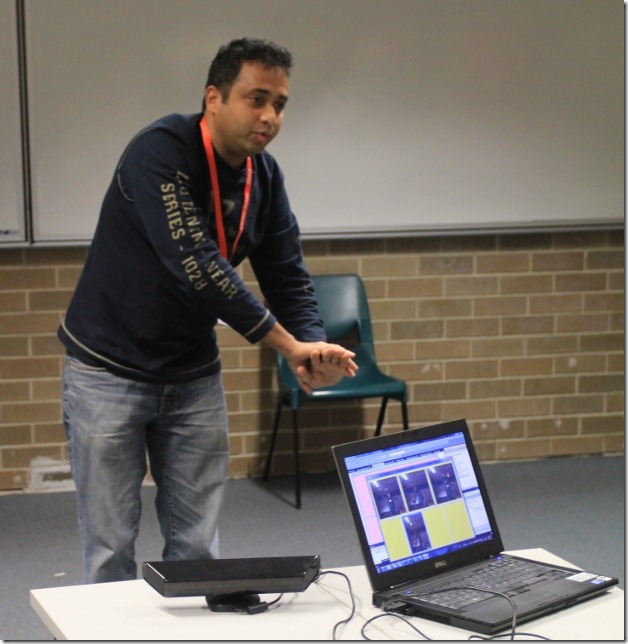

Minority Report-style video feeds that could be moved to different parts of the screen.