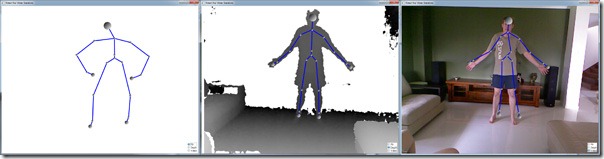

The most requested code snippet at our recent workshop, and one I didn’t have, was how to properly align a Kinect skeleton to either the video image or the depth image. It turns out this is non-trivial, and most samples that just scale all three items to fit the screen are wrong. I took the time this weekend to make a good sample for others to follow. If you just want the two snippets, scroll to the very bottom of the article; otherwise continue reading for a full walkthrough, which starts from the Kinect Beta2 clean WPF code.

The two key methods we are going to use are:

```csharp
Microsoft.Research.Kinect.Nui.SkeletonEngine.SkeletonToDepthImage(
    Microsoft.Research.Kinect.Nui.Vector, out float, out float)

Microsoft.Research.Kinect.Nui.Camera.GetColorPixelCoordinatesFromDepthPixel(
    Microsoft.Research.Kinect.Nui.ImageResolution,
    Microsoft.Research.Kinect.Nui.ImageViewArea,
    int, int, short, out int, out int)
```

To make this easy to drop into your project, I’ve put this scaling into my skeleton control and let you choose by setting a mode. To scale to the depth image or the video image you need to do up to three things:

- Set the ScaleMode property to ToFill, ToDepth or ToVideo.

- Set the Runtime property to a reference to the Kinect Runtime being used.

- (ToVideo, and ToDepth as the code below is written) Set the DepthImage property to the most recent depth PlanarImage.

For example, let’s use WPF binding to do this and switch modes with radio buttons. Here is the XAML:

```xml
<Window x:Class="VideoSkeleton.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:Skeleton="clr-namespace:SoulSolutions.Kinect.Controls.Skeleton;assembly=SoulSolutions.Kinect.Controls.Skeleton"
        xmlns:VideoSkeleton="clr-namespace:VideoSkeleton"
        Title="Kinect Nui Video Skeletons"
        WindowStartupLocation="CenterScreen"
        SizeToContent="WidthAndHeight">
    <Window.Resources>
        <VideoSkeleton:EnumConverter x:Key="EnumConverter" />
    </Window.Resources>
    <Grid x:Name="layoutRoot" Width="1024" Height="768">
        <Image x:Name="screenImage" />
        <Skeleton:SkeletonControl x:Name="skeleton"
                                  ScaleMode="{Binding ScaleMode, Mode=TwoWay}"
                                  Runtime="{Binding Nui}"
                                  BoneFill="Blue" />
        <StackPanel Orientation="Vertical"
                    HorizontalAlignment="Right"
                    VerticalAlignment="Bottom"
                    Background="White"
                    Margin="8,8,8,8">
            <RadioButton Content="Fill"
                         IsChecked="{Binding ElementName=skeleton, Path=ScaleMode, Converter={StaticResource EnumConverter}, Mode=TwoWay, ConverterParameter=ToFill}" />
            <RadioButton Content="Depth"
                         IsChecked="{Binding ElementName=skeleton, Path=ScaleMode, Converter={StaticResource EnumConverter}, Mode=TwoWay, ConverterParameter=ToDepth}" />
            <RadioButton Content="Video"
                         IsChecked="{Binding ElementName=skeleton, Path=ScaleMode, Converter={StaticResource EnumConverter}, Mode=TwoWay, ConverterParameter=ToVideo}" />
        </StackPanel>
    </Grid>
</Window>
```

We will set “screenImage” in code to be the depth or video image. “skeleton” is the control (more on how it works below), and the radio buttons use element binding to set the ScaleMode; a simple EnumConverter makes the binding magic work.
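The EnumConverter itself is not listed in this article. As a rough idea of what such a converter might look like, here is a minimal sketch built on WPF’s standard IValueConverter interface. It is an assumption about the approach, not the code from the sample: it checks a radio button when the bound enum value has the same name as the ConverterParameter, and writes the enum value back only when a button becomes checked.

```csharp
using System;
using System.Globalization;
using System.Windows.Data;

// Hypothetical sketch of an enum <-> bool converter for radio buttons.
// The real EnumConverter in the sample project may differ.
public class EnumConverter : IValueConverter
{
    // Enum -> bool: true when the bound value's name matches ConverterParameter.
    public object Convert(object value, Type targetType, object parameter, CultureInfo culture)
    {
        if (value == null || parameter == null) return false;
        return string.Equals(value.ToString(), parameter.ToString(), StringComparison.Ordinal);
    }

    // bool -> enum: only the button that just became checked updates the source.
    public object ConvertBack(object value, Type targetType, object parameter, CultureInfo culture)
    {
        if (parameter == null || !(value is bool) || !(bool)value)
            return Binding.DoNothing;

        // targetType is the type of the bound source property (an enum here).
        return Enum.Parse(targetType, parameter.ToString());
    }
}
```

Returning Binding.DoNothing for the unchecked case stops the button that is being unchecked from overwriting the value just set by the button that was checked.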

The code behind (XAML.cs) looks like this:

```csharp
using System;
using System.ComponentModel;
using System.Linq;
using System.Windows;
using Coding4Fun.Kinect.Wpf;
using Microsoft.Research.Kinect.Nui;
using SoulSolutions.Kinect.Controls.Skeleton;

namespace VideoSkeleton
{
    /// <summary>
    /// Interaction logic for MainWindow.xaml
    /// </summary>
    public partial class MainWindow : Window, INotifyPropertyChanged
    {
        private InteropBitmapHelper imageHelper;

        private Runtime nui;
        public Runtime Nui
        {
            get { return nui; }
            set
            {
                if (nui != null)
                {
                    //dispose all events and unintialise
                    nui.VideoFrameReady -= NuiVideoFrameReady;
                    nui.DepthFrameReady -= NuiDepthFrameReady;
                    nui.SkeletonFrameReady -= NuiSkeletonFrameReady;
                    nui.Uninitialize();
                }
                nui = value;
                if (nui != null)
                {
                    //wire up events and initialise
                    //step 1 events
                    nui.VideoFrameReady += NuiVideoFrameReady;
                    nui.DepthFrameReady += NuiDepthFrameReady;
                    nui.SkeletonFrameReady += NuiSkeletonFrameReady;
                    //step 2 initialise
                    nui.Initialize(RuntimeOptions.UseColor | RuntimeOptions.UseDepth | RuntimeOptions.UseSkeletalTracking);
                    //step 3 open streams / settings
                    nui.VideoStream.Open(ImageStreamType.Video, 2, ImageResolution.Resolution640x480, ImageType.Color);
                    nui.DepthStream.Open(ImageStreamType.Depth, 2, ImageResolution.Resolution640x480, ImageType.Depth);
                    //Must set to true and set after call to Initialize
                    nui.SkeletonEngine.TransformSmooth = true;
                }
                OnPropertyChanged("Nui");
            }
        }

        private SkeletonScaleMode scaleMode;
        public SkeletonScaleMode ScaleMode
        {
            get { return scaleMode; }
            set
            {
                scaleMode = value;
                //clean up background images
                screenImage.Source = null;
                imageHelper = null;
                OnPropertyChanged("ScaleMode");
            }
        }

        private void NuiDepthFrameReady(object sender, ImageFrameReadyEventArgs e)
        {
            skeleton.DepthImage = e.ImageFrame.Image;
            if (ScaleMode != SkeletonScaleMode.ToDepth) return;
            //Use Coding4Fun extension method on ImageFrame class for Depth
            screenImage.Source = e.ImageFrame.ToBitmapSource();
        }

        void NuiSkeletonFrameReady(object sender, SkeletonFrameReadyEventArgs e)
        {
            if (e.SkeletonFrame == null) return;
            SkeletonFrame allSkeletons = e.SkeletonFrame;
            //get the first tracked skeleton
            var skeletonData = (from s in allSkeletons.Skeletons
                                where s.TrackingState == SkeletonTrackingState.Tracked
                                select s).FirstOrDefault();
            if (skeletonData == null) return;
            skeleton.SkeletonData = skeletonData;
        }

        void NuiVideoFrameReady(object sender, ImageFrameReadyEventArgs e)
        {
            if (ScaleMode != SkeletonScaleMode.ToVideo) return;
            PlanarImage planarImage = e.ImageFrame.Image;
            //An interopBitmap is a WPF construct that enables resetting the Bits of the image.
            //This is more efficient than doing a BitmapSource.Create call every frame.
            if (imageHelper == null)
            {
                imageHelper = new InteropBitmapHelper(planarImage.Width, planarImage.Height, planarImage.Bits);
                screenImage.Source = imageHelper.InteropBitmap;
            }
            else
            {
                imageHelper.UpdateBits(planarImage.Bits);
            }
        }

        public MainWindow()
        {
            InitializeComponent();
            Closed += WindowClosed;
            Loaded += WindowLoaded;
            layoutRoot.DataContext = this;
        }

        private void WindowLoaded(object sender, RoutedEventArgs e)
        {
            Runtime.Kinects.StatusChanged += KinectsStatusChanged;
            Nui = Runtime.Kinects.Where(k => k.Status == KinectStatus.Connected).FirstOrDefault();
        }

        void KinectsStatusChanged(object sender, StatusChangedEventArgs e)
        {
            //ignore the case where a 2nd kinect is connected while we are using the 1st kinect.
            if (Nui != null && Nui.InstanceName != e.KinectRuntime.InstanceName) return;
            Nui = Runtime.Kinects.Where(k => k.Status == KinectStatus.Connected).FirstOrDefault();
        }

        void WindowClosed(object sender, EventArgs e)
        {
            Runtime.Kinects.StatusChanged -= KinectsStatusChanged;
            Nui = null;
        }

        public event PropertyChangedEventHandler PropertyChanged;

        private void OnPropertyChanged(string propertyName)
        {
            if (PropertyChanged != null)
            {
                PropertyChanged(this, new PropertyChangedEventArgs(propertyName));
            }
        }
    }
}
```

If you have read the Kinect Beta2 clean WPF code post this will look very familiar. The changes to make this work are:

INotifyPropertyChanged

A really simple trick that lets us bind in XAML to properties in the code-behind is to implement INotifyPropertyChanged and raise PropertyChanged events. This lets us wire up the Kinect Runtime and the ScaleMode properties with ease.

ScaleMode

This property is bound to the skeleton control’s property of the same name. We use it to decide which background image to show: nothing, video or depth.

NuiDepthFrameReady

Here we do two new things. First, we pass the depth data to the skeleton control, which needs it to align the depth values to the colour video image. Second, we render the depth as the background only if we are in the ToDepth scale mode.

NuiVideoFrameReady

As in the depth event handler, we only render the video image if we are in the ToVideo scale mode.

Changes made to the Skeleton Control to support this?

Things get a little trickier here, but with this example I’m sure you’ll follow along and be enhancing it for your own project in no time. Firstly, we’ve added three new properties:

- Runtime: the Kinect Runtime, which we need in order to do the conversions.

- ScaleMode: a new enum that lets the user set what type of scaling they want.

- DepthImage: the most recent depth PlanarImage available, which you need to pass in so we can convert depth to video.

All the magic happens in the old SetUiPosition method: a big switch statement applies the correct logic for each mode. Let’s review each in turn:

ToFill

Scale the skeleton to best fit the size of the control, using the Coding4Fun helper as usual.

```csharp
if (ActualWidth == 0 || ActualHeight == 0) return;
var scaledJoint = joint.ScaleTo((int) ActualWidth, (int) ActualHeight, 1f, 1f);
//center in control
Canvas.SetLeft(ui, scaledJoint.Position.X - ui.ActualWidth/2);
Canvas.SetTop(ui, scaledJoint.Position.Y - ui.ActualHeight/2);
```

ToDepth

Convert the vector to the corresponding depth image pixel then scale to fit the control.

```csharp
if (Runtime == null || DepthImage == null) return;
Runtime.SkeletonEngine.SkeletonToDepthImage(joint.Position, out depthX, out depthY);
depthPixelX = (int) Math.Max(0, Math.Min(depthX * DepthImage.Value.Width, DepthImage.Value.Width-1));
depthPixelY = (int) Math.Max(0, Math.Min(depthY * DepthImage.Value.Height, DepthImage.Value.Height-1));
//scale to actual size of control
Canvas.SetLeft(ui, depthPixelX * (ActualWidth / DepthImage.Value.Width));
Canvas.SetTop(ui, depthPixelY * (ActualHeight / DepthImage.Value.Height));
```
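To make the arithmetic in the ToDepth branch easier to follow, here is the same clamp-then-scale calculation pulled out into plain functions with no Kinect or WPF dependency. The class and method names (DepthMapping, ToPixel, ToControl) are hypothetical and exist only for this illustration; the sketch assumes, as the code above does, that SkeletonToDepthImage returns coordinates normalised to the 0..1 range.

```csharp
using System;

// Hypothetical helper that isolates the arithmetic used in the ToDepth branch.
public static class DepthMapping
{
    // Convert a normalised coordinate to a pixel index, clamped to
    // the valid range 0..size-1 so joints just outside the frame stay on the edge.
    public static int ToPixel(float normalised, int size)
    {
        return (int)Math.Max(0, Math.Min(normalised * size, size - 1));
    }

    // Scale a pixel index from image space to the on-screen control size.
    public static double ToControl(int pixel, int imageSize, double controlSize)
    {
        return pixel * (controlSize / imageSize);
    }
}

public static class DepthMappingDemo
{
    public static void Main()
    {
        // A joint at the horizontal centre of a 320-pixel-wide depth image,
        // drawn on a control that is 1024 pixels wide.
        int pixelX = DepthMapping.ToPixel(0.5f, 320);
        double left = DepthMapping.ToControl(pixelX, 320, 1024);
        Console.WriteLine("pixel {0}, control x {1}", pixelX, left);

        // A joint slightly outside the frame is clamped to the last pixel.
        Console.WriteLine(DepthMapping.ToPixel(1.2f, 320));
    }
}
```

With a 320-pixel-wide depth image, a normalised value of 0.5 lands on pixel 160, and a value of 1.2 is clamped to pixel 319.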

ToVideo

Convert the vector to the corresponding depth image pixel, then convert that to the colour pixel, then scale to fit the control.

```csharp
if (Runtime == null || Runtime.VideoStream == null || DepthImage == null) return;
Runtime.SkeletonEngine.SkeletonToDepthImage(joint.Position, out depthX, out depthY);
depthPixelX = (int) Math.Max(0, Math.Min(depthX * DepthImage.Value.Width, DepthImage.Value.Width-1));
depthPixelY = (int) Math.Max(0, Math.Min(depthY * DepthImage.Value.Height, DepthImage.Value.Height-1));
int colourX;
int colourY;
var viewArea = new ImageViewArea
{
    CenterX = 0,
    CenterY = 0,
    Zoom = ImageDigitalZoom.Zoom1x
};
int index = (depthPixelY * DepthImage.Value.Width + depthPixelX) * 2;
var depthValue = (short)(DepthImage.Value.Bits[index] | (DepthImage.Value.Bits[index + 1] << 8));
Runtime.NuiCamera.GetColorPixelCoordinatesFromDepthPixel(Runtime.VideoStream.Resolution, viewArea, depthPixelX, depthPixelY, depthValue, out colourX, out colourY);
//bug in Kinect SDK? Seems to assume depth is 320x240, half of colour image
if (DepthImage.Value.Width == 640)
{
    colourX = colourX / 2;
    colourY = colourY / 2;
}
//scale to actual size of control
Canvas.SetLeft(ui, colourX * (ActualWidth / Runtime.VideoStream.Width));
Canvas.SetTop(ui, colourY * (ActualHeight / Runtime.VideoStream.Height));
```
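The step in the ToVideo branch that reads the depth value is easy to miss: the depth frame’s Bits array stores each pixel as two bytes, low byte first, so the code combines two adjacent bytes into one 16-bit value. The sketch below shows just that lookup on a plain byte array. The names DepthBits and DepthAt are hypothetical, and the two-pixel test frame is made up for illustration.

```csharp
using System;

// Hypothetical helper showing how a 16-bit depth value is read from the raw bytes.
public static class DepthBits
{
    // Read the little-endian 16-bit value for pixel (x, y) from a frame
    // that stores two bytes per pixel, row by row.
    public static short DepthAt(byte[] bits, int width, int x, int y)
    {
        int index = (y * width + x) * 2; // two bytes per pixel
        return (short)(bits[index] | (bits[index + 1] << 8));
    }
}

public static class DepthBitsDemo
{
    public static void Main()
    {
        // A made-up frame that is 2 pixels wide and 1 pixel high.
        byte[] bits = { 0x34, 0x12, 0xE8, 0x03 };
        Console.WriteLine(DepthBits.DepthAt(bits, 2, 0, 0)); // 0x1234 = 4660
        Console.WriteLine(DepthBits.DepthAt(bits, 2, 1, 0)); // 0x03E8 = 1000
    }
}
```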

Comments

As you can see, it is not as simple or as clean as you would like. A simple extension method on Joint would be ideal; instead we need access to the Runtime itself to get the depth pixel, and a depth image frame to get the colour pixel. During testing I found that 640×480 video with 320×240 depth worked fine, but with the depth raised to 640×480 the whole thing was off, hence the fix. Weird. You may have noticed this all works with or without the player index being captured in the depth stream, but even with that off it fails for colour images above 640×480. I don’t see why this shouldn’t work with a 1280×1024 image, but it doesn’t. Let’s hope this all gets addressed in the next beta; until then, feel free to use the code from this article in any form.

Question / Comment? Catch me on http://twitter.com/soulsolutions